The Ups and Downs of Recovery at The Forge

Talk More, Tech Less: Digital Wellness Tips From Dawn Wible

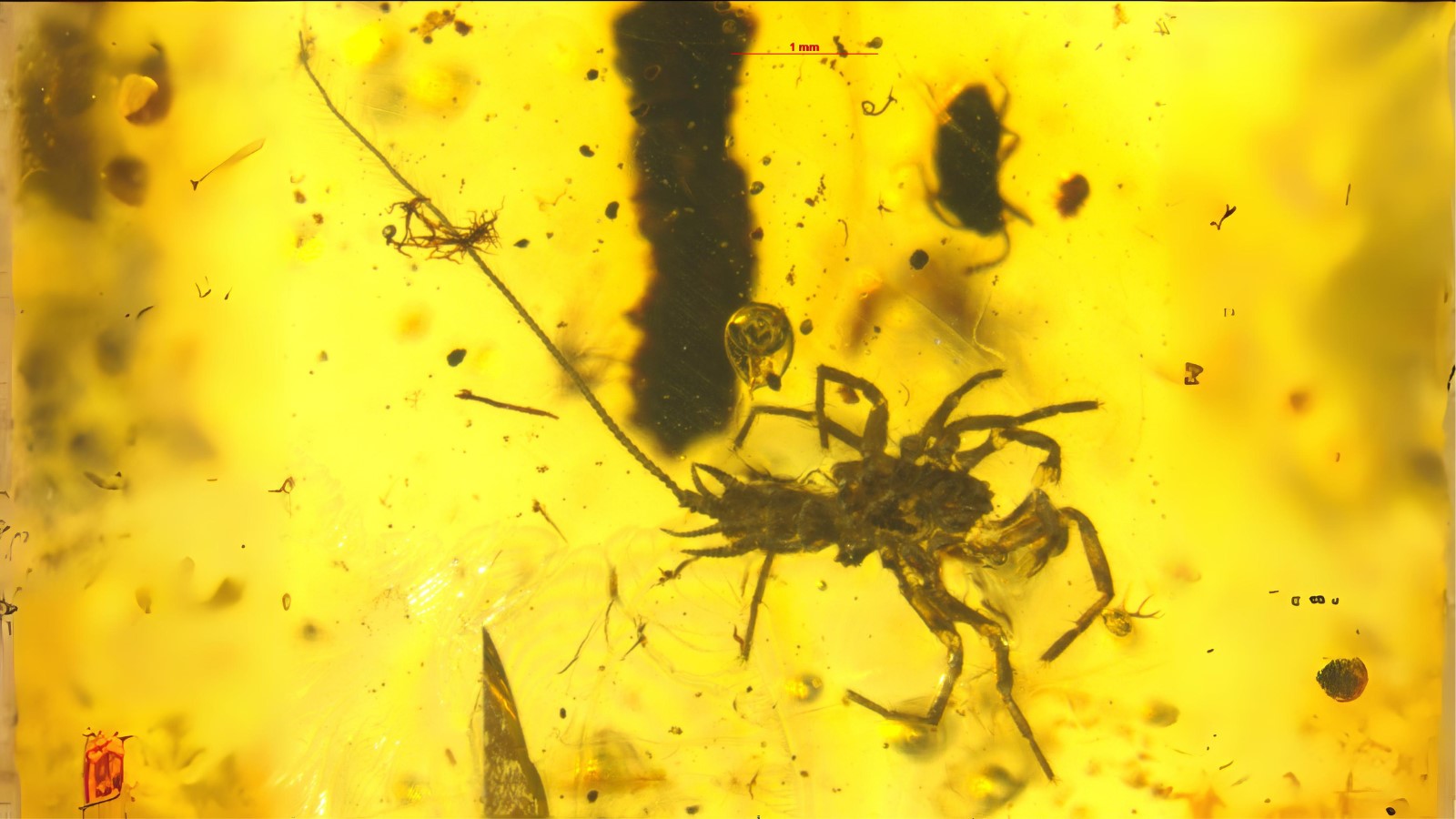

Unraveling the Mess of Arachnid Phylogeny

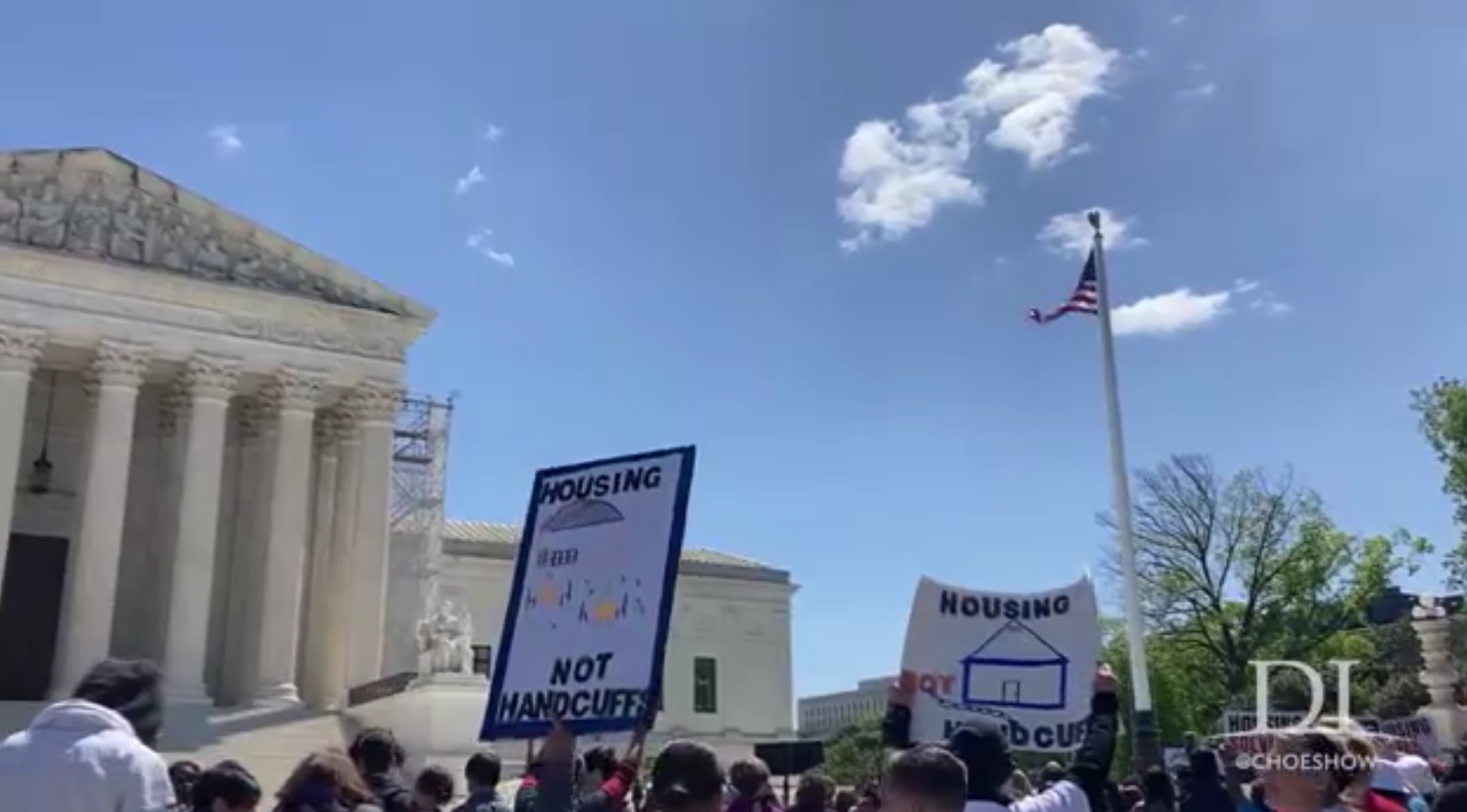

The Dirty Little Secret About Homelessness Is the Key to Ending It

The Biden Department of Education Seems to Hate Women and Girls

Supreme Court Hears Arguments in Homelessness Case: Full Analysis

Thomas Linzey on the Nature Rights Movement

Top Ten Cheats in “Monumental” Origin of Life Research