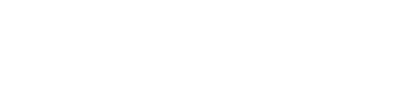

Description

Doomsday headlines warn that the age of “killer robots” is upon us, and that new military technologies based on artificial intelligence (AI) will lead to the annihilation of the human race. What’s fact and what’s fiction? In The Case for Killer Robots: Why America’s Military Needs to Continue Development of Lethal AI, artificial intelligence expert Robert J. Marks investigates the potential military use of lethal AI and examines the practical and ethical challenges. Marks provocatively argues that the development of lethal AI is not only appropriate in today’s society—it is unavoidable if America wants to survive and thrive into the future. Dr. Marks directs the Walter Bradley Center for Natural and Artificial Intelligence at Discovery Institute, and he is a Distinguished Professor of Electrical and Computer Engineering at Baylor University. This short monograph is produced in conjunction with the Walter Bradley Center for Natural and Artificial Intelligence, which can be visited at centerforintelligence.org.

Plaudits

This book is a succinct, well-reasoned, detailed and provocative voice in one of the most important conversations of our time. It should be read by anyone with an interest in the moral and social implications of AI.

Donald C. Wunsch II, PhD, Mary K. Finley Missouri Distinguished Professor of Computer Engineering, Missouri University of Science and Technology; Director, Applied Computational Intelligence Laboratory, Missouri University of Science and Technology

Science fiction-fed fears of killer robots ‘waking up’ and taking over the world prevent us from facing this basic fact: Bad guys have a say in what the world is like. A decision not to develop AI for defense is a choice to allow our most vicious enemies to develop superior weaponry to threaten and kill the innocent. It also means our weapons will be more rather than less likely to harm and kill non-combatants. In this vitally important book, Robert Marks makes a lucid and compelling case that we have a moral obligation to develop lethal AI. He also reminds us that moral questions apply, not to the tools that we use to protect ourself, but to how we use them when war becomes a necessity.

Jay Richards, PhD, Research Assistant Professor, Busch School of Business, The Catholic University of America; author, The Human Advantage: The Future of American Work in an Age of Smart Machines